This is an interface to both SVD-based (default) and RGCCA-based GCA (wrapping the RGCCA::rgcca function).

Arguments

- X

list of input blocks.

- ncomp

integer number of components to extract, either single integer (equal for all blocks), vector (individual per block) or 'max' for maximum possible number of components.

- svd

logical indicating if Singular Value Decomposition approach should be used (default=TRUE).

- tol

numeric tolerance for component inclusion (singular values).

- corrs

logical indicating if correlations should be calculated for RGCCA based approach.

- ...

additional arguments for RGCCA approach.
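As an illustration of how these arguments fit together, the following is a minimal sketch of two calls on simulated data. The block names (A, B, C) and the simulated matrices are assumptions made for this example only. The calls are guarded so that the snippet also runs when the multiblock package is not installed.

```r
# Simulated input: three blocks sharing the same 20 samples (rows).
# Block names and contents are made up for illustration.
set.seed(42)
n <- 20
blocks <- list(
  A = matrix(rnorm(n * 4), n, 4),
  B = matrix(rnorm(n * 3), n, 3),
  C = matrix(rnorm(n * 5), n, 5)
)

if (requireNamespace("multiblock", quietly = TRUE)) {
  # SVD-based GCA (the default), two components from every block
  fit_two <- multiblock::gca(blocks, ncomp = 2, svd = TRUE)

  # SVD-based GCA with the maximum possible number of components
  fit_max <- multiblock::gca(blocks, ncomp = "max")

  # Setting svd = FALSE would select the RGCCA-based approach instead;
  # that path additionally needs the RGCCA package and is not run here.
}
```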

Value

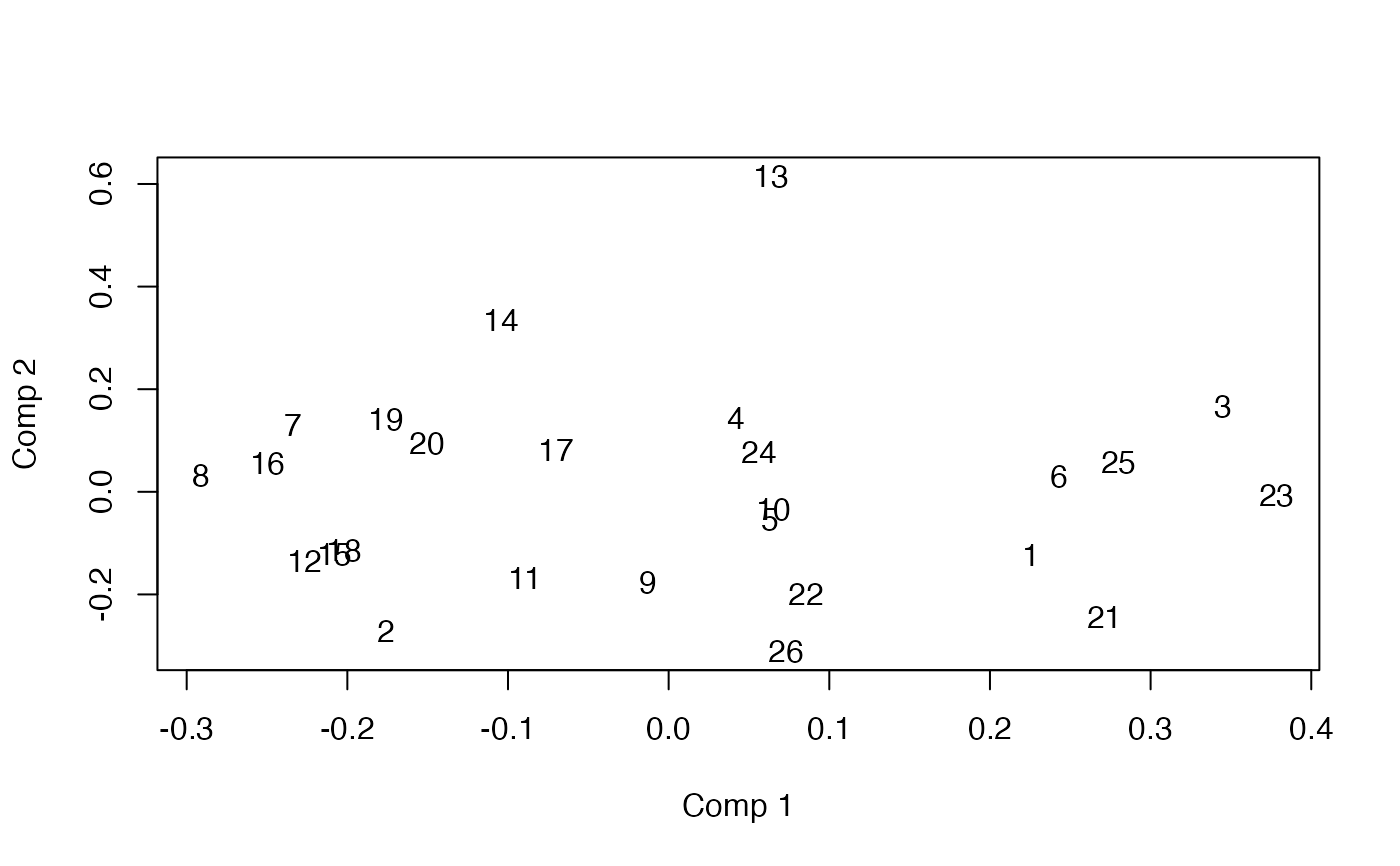

A multiblock object including relevant scores and loadings. Relevant plotting functions are found in multiblock_plots and result functions in multiblock_results. The element blockCoef contains canonical coefficients, while blockDecomp contains decompositions of each block.

Details

GCA is a generalisation of Canonical Correlation Analysis to handle three or more blocks. There are several ways to generalise, and two of these are available through gca. The default is an SVD-based approach that estimates a common subspace and measures the mean squared correlation to it. An alternative approach is available through RGCCA. For the SVD-based approach, the ncomp parameter controls the block-wise decomposition, while the subsequent consensus decomposition is limited to the minimum number of components retained from the individual blocks.
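The SVD-based idea described above can be sketched in base R. This is a minimal illustration of one common formulation (in the spirit of Carroll, 1968), not the package's actual implementation: gca_svd_sketch and its return elements are hypothetical names, and the simulated data are assumptions. Each centred block is reduced to an orthonormal basis, the bases are concatenated, and a second SVD gives the consensus components. The squared singular values of that second SVD, divided by the number of blocks, equal the mean squared multiple correlation between each consensus component and the blocks.

```r
# Hypothetical helper, not part of the multiblock package.
gca_svd_sketch <- function(X, ncomp = NULL, tol = 1e-12) {
  K <- length(X)

  # Block-wise step: orthonormal basis of each column-centred block,
  # keeping only components whose singular value exceeds tol.
  bases <- lapply(X, function(x) {
    s <- svd(scale(as.matrix(x), scale = FALSE))
    keep <- which(s$d > tol)
    if (!is.null(ncomp)) keep <- keep[seq_len(min(ncomp, length(keep)))]
    s$u[, keep, drop = FALSE]
  })

  # Consensus step: limited to the smallest number of retained components.
  A <- min(vapply(bases, ncol, integer(1)))
  s <- svd(do.call(cbind, bases), nu = A, nv = 0)
  consensus <- s$u

  # Squared multiple correlation of each consensus component with each block
  # (rows: components, columns: blocks).
  R2 <- matrix(
    vapply(bases, function(U) colSums(crossprod(U, consensus)^2), numeric(A)),
    nrow = A
  )

  list(consensus = consensus, R2 = R2, meanR2 = s$d[seq_len(A)]^2 / K)
}

# Simulated blocks sharing one latent signal (illustrative data only).
set.seed(1)
n <- 30
latent <- rnorm(n)
blocks <- list(
  A = cbind(latent + rnorm(n, sd = 0.1), rnorm(n), rnorm(n)),
  B = cbind(rnorm(n), latent + rnorm(n, sd = 0.1)),
  C = cbind(latent + rnorm(n, sd = 0.1), rnorm(n), rnorm(n), rnorm(n))
)
fit <- gca_svd_sketch(blocks)
fit$meanR2  # mean squared correlation per consensus component
```

In this sketch the smallest block has two variables, so the consensus is limited to two components, mirroring the limit described for the SVD-based approach.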

References

Carroll, J. D. (1968). Generalization of canonical correlation analysis to three or more sets of variables. Proceedings of the American Psychological Association, pages 227-228.

Van der Burg, E. and Dijksterhuis, G. (1996). Generalised canonical analysis of individual sensory profiles and instrument data, Elsevier, pp. 221–258.

See also

Overviews of available methods, multiblock, and methods organised by main structure: basic, unsupervised, asca, supervised and complex.

Common functions for computation and extraction of results and plotting are found in multiblock_results and multiblock_plots, respectively.